
As a company focused on optimization through experimentation, we run a lot of A/B tests. We test copy changes, subject lines, male vs. female senders, form vs. bot, and more. It’s very informative to pit two options against each other to see which comes out on top. But this week, while running regressions on our most recent experiment, we found that sometimes A vs. B doesn’t actually matter.

Campaign Overview

We created a new customer onboarding campaign for one of our customer success partners. The goal of the campaign is to help new account owners actively use the product. The campaign includes one welcome email followed by a series of eight in-product messages designed to introduce new users to a variety of product features. Our client selected the feature set: the user events that allow customers to get the most out of the product and fully adopt it into their daily activities. There is also a one-off reactivation email sent to users enrolled in the campaign who were not seen in the product for more than four consecutive business days.

Our initial campaign started in August, and we are currently analyzing the results from version three. We plan to test one more version before launching a finalized version five, which will then be monitored continuously.

The Experiment

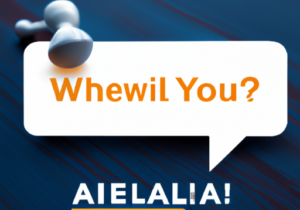

For each of the emails and in-product messages, we created both an A and a B version. Here is a comprehensive list of the A/B tests we ran for different messages:

- Subject line

- Email copy

- Message body copy

- Button copy

- Male vs. female sender

- CTA copy (call to action)

- Including video

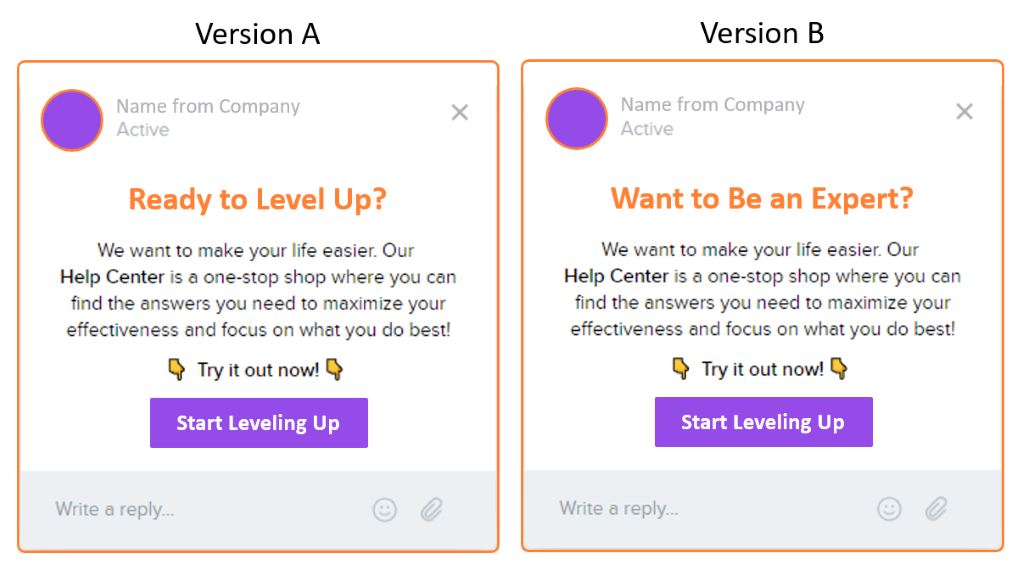

This is one example message with an A/B test on the message subject line:

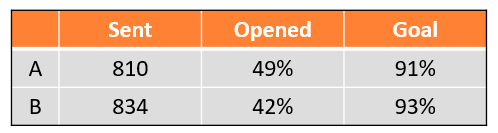

For each message we carefully tracked message sends, open rate, and goal completion rate. The goal was customized to each message and related to the activity being promoted.
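As a rough illustration of the tracking described above, the per-variant metrics can be computed from one record per message send. This is only a sketch: the record layout, the function name `summarize_by_variant`, and the sample rows are hypothetical, and it assumes the goal completion rate is measured per send (the campaign's actual definition may differ).

```python
from collections import defaultdict


def summarize_by_variant(events):
    """Compute sends, open rate, and goal completion rate per variant.

    `events` is an iterable of (variant, opened, completed_goal) tuples,
    one tuple per message send. This layout is an assumption made for
    illustration, not the campaign's real data format.
    """
    counts = defaultdict(lambda: {"sends": 0, "opens": 0, "goals": 0})
    for variant, opened, completed_goal in events:
        tally = counts[variant]
        tally["sends"] += 1
        tally["opens"] += int(opened)
        tally["goals"] += int(completed_goal)
    return {
        variant: {
            "sends": t["sends"],
            # Both rates use sends as the denominator (an assumption).
            "open_rate": t["opens"] / t["sends"],
            "goal_completion_rate": t["goals"] / t["sends"],
        }
        for variant, t in counts.items()
    }


# Made-up sample rows, not campaign data.
events = [
    ("A", True, True),
    ("A", True, False),
    ("A", False, False),
    ("A", False, False),
    ("B", True, False),
    ("B", True, True),
    ("B", True, False),
    ("B", False, False),
]
summary = summarize_by_variant(events)
for variant in sorted(summary):
    print(variant, summary[variant])
```

Keeping one row per send, rather than pre-aggregated rates, makes it straightforward to feed the same records into a regression later.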

The Results

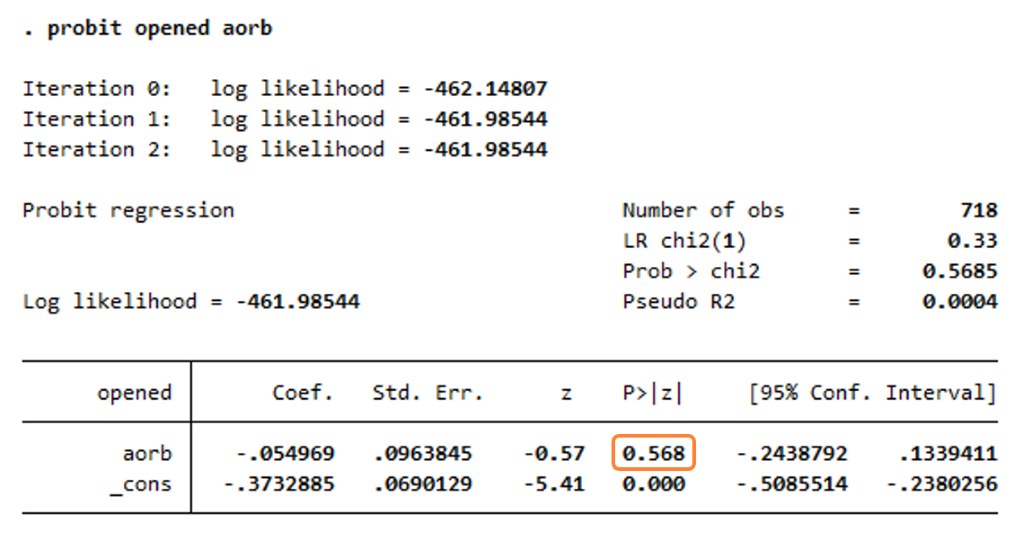

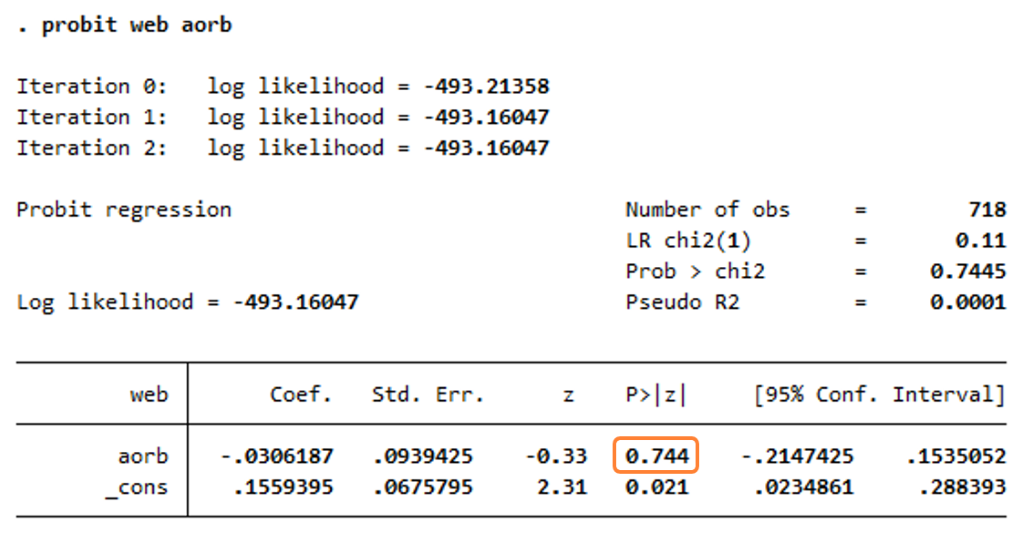

For each of the ten messages we reformatted the data and ran regressions to analyze the difference between message A and message B. We used the message version (A or B) as the explanatory variable (also called the independent variable). Message opens and goal completion were the dependent variables, each in its own regression.
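To make the setup concrete, here is a minimal sketch of one such regression. The post does not say which regression type or software was used, so this assumes an ordinary least squares fit of a 0/1 outcome (opened or not) on a 0/1 variant indicator, with a normal approximation for the p-value. The function name `ab_regression` and the counts are hypothetical and are not the campaign's data.

```python
import math
from statistics import NormalDist


def ab_regression(variant, outcome):
    """OLS of a 0/1 outcome on a 0/1 variant indicator (0 = A, 1 = B).

    With a single binary regressor, the intercept is the outcome rate
    for A and the slope is the rate for B minus the rate for A.
    """
    n = len(variant)
    x_mean = sum(variant) / n
    y_mean = sum(outcome) / n
    sxx = sum((x - x_mean) ** 2 for x in variant)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(variant, outcome))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    residual_ss = sum(
        (y - intercept - slope * x) ** 2 for x, y in zip(variant, outcome)
    )
    # Standard error of the slope under the usual OLS assumptions.
    std_err = math.sqrt(residual_ss / (n - 2) / sxx)
    z = slope / std_err
    # Two-sided p-value using a normal approximation (assumes a large sample).
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "intercept": intercept,
        "slope": slope,
        "std_err": std_err,
        "p_value": p_value,
    }


# Made-up counts: 200 sends per variant, 60 opens for A and 66 for B.
variant = [0] * 200 + [1] * 200
outcome = [1] * 60 + [0] * 140 + [1] * 66 + [0] * 134
result = ab_regression(variant, outcome)
print(result)
```

With these illustrative numbers the slope is a three-point difference in open rate, yet the p-value is well above 0.05, which is the pattern a non-significant A/B comparison produces. A logistic regression would be a common alternative for binary outcomes.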

Regression 1: Message open as the dependent variable

Regression 1: Goal completion as the dependent variable

When the p-value is high, as seen in these regressions, the results of the analysis are not statistically significant: the observed difference between A and B cannot be distinguished from chance. Out of all twenty regressions (two for each of the ten messages), none showed statistical significance.

Takeaways

The typical assumption is that different versions of the same message will affect open rates and customer adoption of the product. This study did not support that hypothesis: no regression comparing the A and B messages showed a statistically significant difference. Experiments like these show marketers why it is necessary to know what is relevant and what is not. Creating good copy that resonates with customers and communicates a valuable message is relevant; small tweaks to subject lines and message copy are not. A better understanding of what affects overall results helps focus time and attention on value-adding activities and reduces wasted time and resources.

In the next version of the campaign we plan to adjust our experimentation tactic by A/B testing more substantial differences between messages. For example, instead of testing word choice, we will test body copy focused on the result of using a specific feature against a second version that explains how to use the feature. We also plan to test whether professional or casual bot language leads to better goal completion.

Conclusion

The expectation was that analyzing the data would tell us which message is better, A or B. Instead, the regressions show that, for differences this small, it doesn’t matter. Sometimes test results do not answer the question we set out to answer, but instead answer questions we never thought to ask and guide the process forward with a better focus on what is truly valuable.
